Fostering AI literacy as students, teachers, and researchers

Dr Lynette Pretorius

Dr Lynette Pretorius is an award-winning educator and researcher specialising in doctoral education, academic identity, student wellbeing, AI literacy, autoethnography, and research skills development.

Credit: This blog post is adapted from a recent paper I wrote.

Artificial intelligence (AI) has been present in society for several years – think, for example, of computer grammar-checking software, autocorrect on your phone, or GPS apps. Recently, however, there has been a significant advancement in AI research with the development of generative AI technologies like ChatGPT. Generative AI refers to technologies which can perform tasks that require creativity by using computer-based networks to create new content based on what they have previously learnt.

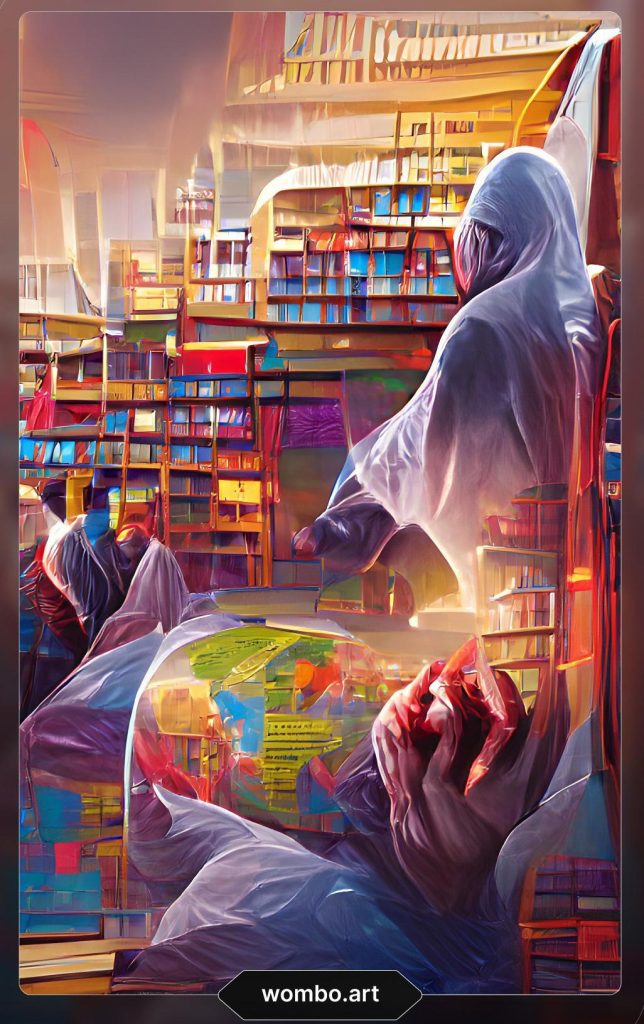

For example, generative AI technologies now exist which can write poetry or paint a picture. Indeed, I entered the title of one of my published books (Research and Teaching in a Pandemic World) into a generative AI which paints pictures (Dream by WOMBO). The response it generated accurately represented the book’s content and was eye-catching, and I believe it would have been a very suitable picture for the cover. Check it out:

(Note: This response was generated by Dream by WOMBO (WOMBO Studios, Inc., https://dream.ai/) on December 12, 2021 by entering the prompt “research and teaching in a pandemic world” into the generator and selecting a preferred style of artwork.)

The introduction of generative AI has, however, led to a certain amount of panic among educators; many workshops, discussions, policy debates, and curriculum redesign sessions have been run, particularly in the higher education context. Educators acknowledge that generative AI can be leveraged to support student learning. In fact, it is clear that students will likely be expected to know how to use this technology when they enter the workforce. Importantly, though, there has also been significant concern that generative AI would encourage students to cheat. For example, many educators fear that students could enter their essay topic into a generative AI and that it would generate an original piece of work which meets the task requirements well enough to pass.

I believe what is missing from these discussions regarding generative AI is the fact that assessment regimes focus predominantly on the product of learning. This focus assumes that the final assignment is indicative of all the student’s learning but neglects the importance of the learning process. This is where generative AI can be a valuable tool. From this perspective, the technology should be considered an aid, with the intellectual work of the user lying in the choice of an appropriate prompt, the assessment of the suitability of the output, and the subsequent modification of that prompt if the output does not seem suitable. Some examples of the use of generative AI as an aid include helping students develop an outline or brainstorm ideas for an assignment, providing feedback to students on their work, guiding students in learning how to improve the communication of their ideas, and acting as an after-hours tutor or a way for English-language learners to improve their writing skills. Using generative AI in this more educative manner can help students better engage with the process of their learning.

In a similar way to when Microsoft Word first introduced a spell-checker, I believe generative AI will become part of our everyday interactions in a more digitally connected and inclusive world. Importantly, though, as mentioned above, while generative AI may help the user create something, it is dependent on the user providing it with appropriate prompts to be effective. The user is also responsible for evaluating the accuracy or usefulness of what is generated. As such, we need to teach students how to communicate effectively and collaboratively with generative AI technologies, as well as evaluate the trustworthiness of the results obtained – a concept termed AI literacy. I believe AI literacy is likely to soon become a key graduate attribute for all students as we move into a more digital world which integrates human and non-human actions to perform complex tasks.

It appears that my university has come to the same conclusion. Monash University’s generative AI policy notes that students and researchers at Monash University are allowed to use generative AI, provided that appropriate acknowledgement is made in the text to indicate what role the generative AI played in creating the final product. The University has also created a whole range of resources which are freely accessible to students and the wider public to help them learn how to use generative AI ethically. I have recently developed a video (Using generative artificial intelligence in your assignments and research) that explains what generative AI is and what it can be used for in assignments and research.

In my teaching practice, I now advise students to use generative AI as a tool to help them improve their approaches to their assignments. I suggest, in particular, that generative AI can be used as a tool to start brainstorming and planning for their assignment or research project. I include examples of how generative AI can be used for various purposes in my classes. For example, I highlight that generative AI may be able to assist a researcher in generating some starting research questions, but it is the researcher’s responsibility to refine these questions to reflect their particular research focus, theoretical lens, and so on. I emphasise to students that generative AI will not do all the work for them; they need to understand that they are still responsible for deciding what to do with the information, linking the ideas together, and showing deeper creativity and problem-solving in the final version of their work.

I have recently showcased this approach in two videos which are freely available on YouTube. The first video (Using generative artificial intelligence in your assignments and research) explains what generative AI is and what it can be used for in assignments and research. The second video (Using generative AI to develop your research questions) showcases a worked example of how I collaborated with a generative AI to formulate research questions for a PhD project. These videos can be reused by other educators as needed.

The second video starts by showing students how I used ChatGPT to brainstorm a starting point for a research project by asking it to “Act as a researcher” and list the key concerns of doctoral training programmes. In this way, I show students the importance of prompt design in the way they collaborate with the generative AI. In the video, I show that ChatGPT provided me with a list of seven core concerns and note that, using my expertise in the field, I have evaluated these concerns and can confirm that they are representative of the thinking in the discipline. In the rest of the video, I showcase how I can continue my conversation with the generative AI by asking it to formulate a research question that investigates the identified core concerns. I show students how I collaborated with the generative AI to refine the research question until, in the end, a good-quality question was developed which incorporates the specificity and theoretical positioning necessary for a PhD-level research question.

It is important to note that students are likely not yet experts in their field when they are designing their research questions. Therefore, it is important to provide them with guidance as to how to evaluate the ideas produced by generative AI. This includes highlighting that a generative AI is not always accurate, that it may disregard some information which may be pertinent to a specific research project, or that it may fabricate information. Students need to learn that a generative AI is not a tool similar to an encyclopedia which contains all the correct information. Rather, generative AI is a tool which responds to prompts by generating answers it “thinks” would be appropriate in that particular context. Consequently, I advise students to use generative AI as a starting point and then to explore the literature to further assess the accuracy of the core concerns identified earlier as well as the viability of the research question for their project.

It is also worth noting that generative AI could be used as a way to help students see what a good research question might look like, rather than using it specifically to develop a research question for their particular research project. Generative AI may also be useful in helping students see how to organise the themes in the literature. In this way, we encourage students to use generative AI as part of the learning process, allowing them to scaffold their skills so that they can use their creativity and other higher-order thinking skills to further advance knowledge in their discipline.

Students should also be taught how to appropriately acknowledge the use of generative AI in their work. Monash University has provided template statements for students to use. I use these template statements as part of my regular workshops. In this way, I show students that ethical practice is to acknowledge which parts of the work the generative AI did and which parts of the work were done by a person.

I have also recently used such an acknowledgement in one of my research papers. I have included it below for other researchers to use in their work.

I acknowledge that I used ChatGPT (OpenAI, https://chat.openai.com/) to generate an initial draft outline of the introduction of this manuscript. The prompt provided for this outline was “Act as a social science researcher and write an outline for a paper advocating for change to survey design to collect more diverse participant information”. I adapted the outline it produced for the introduction to reflect my own argument, style, and voice. This section was also significantly adapted through the peer review process. As such, the final version of the manuscript does not include any unmodified content generated by ChatGPT.

As with all new technologies, there are potential challenges and risks that should be considered. Firstly, generative AI technologies can generate results which seem correct but are factually inaccurate or entirely made up. Secondly, there is the issue of equity of access. It is incumbent upon us as educators to ensure that all students have equal access to the technologies they may be required to use in the classroom. Thirdly, there is the risk that the generative AI may learn and reproduce biases present in society. Finally, for researchers, there are also ethical concerns relating to the retention and possible generation of potentially sensitive data.

Generative AI is, at its core, a natural evolution of the technology we already use in our daily practices. In an increasingly digital world, generative AI will become integral to how we function as a society. It is, therefore, incumbent upon us as educators to teach our students how to use the technology effectively, develop AI literacy, and use their higher-order thinking and creativity to further refine the responses they obtain. I believe that this form of explicit modelling is how we, as educators, can help students develop an understanding of generative AI as a tool to improve their work. In this way, we focus on the process of learning, rather than only on the final product submitted for assessment.

Questions to ponder

How do you think AI literacy can be integrated into current educational curricula to enhance learning while ensuring academic integrity? What are the potential challenges and benefits of incorporating generative AI into classroom settings?

How should students and researchers navigate the ethical implications of using AI-generated content in their assignments and research?
